Do you have an archive of content that is difficult to access and reuse? Converting legacy content to XML offers a wide range of benefits, including increased operational efficiency, opportunities to create new products and generate new revenue, the facilitation of interoperability and data sharing, and enhanced search and discovery.

In this recorded webinar, Megan Farrell from Innodata and Chris Hausler from Typefi discuss a range of considerations you should take into account if you’re looking to convert your legacy content to XML.

If you’d like to learn more about anything in this webinar or if you’d simply like to have a chat about your publishing needs, don’t hesitate to contact our Business Development team.

Contents

- Introduction to content conversion (00:38)

- Content conversion benefits and risks (02:17)

- Best practices for a successful content conversion project (04:15)

- Asset inventory: Volume, asset type, priority (04:53)

- Resources (08:00)

- Schedules and timelines (09:46)

- Quality (12:08)

- Conversion+ (14:44)

- Conclusion (16:06)

- About the presenters

Transcript

CHRIS HAUSLER: Hi, this is Chris Hausler, Director of Business Development with Typefi. Today’s webinar is ‘Content conversion: The gateway to smart content’.

Today we have Megan Farrell from Innodata presenting. She’s been an account director with Innodata for seven years and has worked in the technology industry for 20 years, so she has a wealth of experience working with clients and helping them get value out of their content.

I’m going to turn it over to Megan Farrell right now for the presentation.

Introduction to content conversion

MEGAN FARRELL: Thanks, Chris, I’m very happy to be here. I have worked with numerous clients to streamline their workflow, and it always begins with content conversion.

For content to be valuable, it has to be engaging, accurate, and accessible. Everyone has staff and budget restrictions, but still needs to deliver fresh, relevant content cost-efficiently and on deadline.

Smart content is the answer.

Now, the core principle behind smart content is that content can be personalised for and by your clients, which will engage and retain them much better than a one-size-fits-all strategy. Smart content can create tangible value both for your customers and within your organisation.

So, let’s discuss how we’re going to get to smart content.

Publishers create copious amounts of content. However, the data might be sitting in a repository where it’s not accessible or easy to use. So, to monetise your archives, they need to be converted into formats that support a discovery and reuse strategy.

To support such a strategy, what I want to share today is an overview of what you need to know before beginning an archive content conversion. We’re going to review benefits and best practices. I’m going to address why, how, when and who needs to be considered in your plan.

Now, conversion is not a glamorous project, but it can have a huge impact on your operations. You’ve already invested in the content development. You should continue to reap the dividends of that investment.

Content conversion benefits and risks

There are numerous benefits associated with converting to XML.

Organisations can see immediate returns in operational efficiencies through automation. In current projects, we’ve seen client case studies where over 50% of the pre-XML production tasks are manual, and these tasks are made redundant by XML-based tool sets and automation.

Using XML, you increase return on investment on content development. You develop content once and repurpose and resell it again and again. Examples include customised content collections, new products and platforms, or expanding into new markets and territories.

With XML, your content can be ingested directly into your client’s processes and systems instead of remaining in a printed document sitting on a shelf or a PDF in an email. By embedding your content in your client’s workflows, you create more value for your client.

Search engines can find XML content more efficiently, which improves search and discovery so that web crawlers and Google can find you and highlight you in searches. Consumers can’t find your content if they don’t know it exists.

And there are inherent risks in not keeping up with the technology. If you don’t make the transition because you fear the cost or pushback from staff, there are downsides.

Interoperability is expected by the marketplace. Customers and partners need content that’s easy to use and deploy within their own systems and workflows, and you don’t want to be viewed as a commodity or a nice-to-have.

Customers’ price sensitivity has increased, and all brands are under pressure to lower their price points. You have to differentiate your offerings. Content needs to be delivered in a way that’s both impactful and easy for the client to use.

Best practices for a successful content conversion project

So what I’d like to do at a high level is share with you what you need to know before converting legacy content to XML. My goal is to help you build your roadmap for a successful conversion program.

Now, I get asked all the time how much conversion will cost. Before that can be answered, there are four areas that must be assessed and defined.

- How much content do you have?

- How are you going to staff the project?

- What are your timelines? Do you have milestones or deadlines that you have to consider?

- What are the desired service levels?

How you answer these questions will determine your budget.

Asset inventory: Volume, asset type, priority

The first step in scoping a conversion is asset management. You need to determine what you have and its value to the business.

First, take a look at your volume. Organisations routinely underestimate how much content is in their archives, so begin by defining the sheer volume of your archives. Compile a complete accounting of all your data.

You can measure this several different ways: number of books, documents, journals, handbooks. Pages and kilobytes work best if you have them, because there’s less estimating of total volume, but these are all acceptable ways of calculating volume.

If you have scientific or technical content, there needs to be an additional accounting. Now, I’m talking about math formulas, tables, figures, images and graphics. All of these items are treated independently during conversion, and they all have their own associated costs.

CHRIS: Question, does a service provider expect you to know how many formulas and figures are in each and every document?

MEGAN: Well, yes and no.

If it’s an enormous archive (you know, 10,000 or 50,000 documents), we’re going to have to project. How we recommend you do that is to take large sample sets of different types of documents from different eras, identify how many images and formulas each document contains, and average that across the archive.

I do realise it’s not pragmatic to quantify every single formula that might be in 10,000 documents, but it’s so important for budgeting to account for both pages and technical items because all of them have an associated cost. That’s a great question.
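The projection Megan recommends is simple arithmetic: count technical items in a sample, average per document, and scale to the archive. A minimal Python sketch, assuming a small hand-recorded sample; the `SAMPLE` figures and the `project_archive` name are hypothetical, for illustration only.

```python
from statistics import mean

# Hypothetical sample: counts recorded by hand for documents drawn from
# different document types and eras of the archive.
SAMPLE = [
    {"pages": 12, "formulas": 30, "figures": 4, "tables": 2},
    {"pages": 40, "formulas": 0, "figures": 10, "tables": 6},
    {"pages": 25, "formulas": 15, "figures": 3, "tables": 1},
]

def project_archive(sample, total_documents):
    """Average each count across the sample, then scale it to the whole archive."""
    return {
        key: round(mean(doc[key] for doc in sample) * total_documents)
        for key in sample[0]
    }

if __name__ == "__main__":
    print(project_archive(SAMPLE, 10_000))
```

A plain mean treats every sampled document equally. If one document type or era dominates the archive, weighting each sample by its share of the archive would give a more realistic projection.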

The next step is you need to identify formats of your legacy assets. Are they print, PDF image, PDF text, Microsoft Word? Most recently we had a project where they had old legacy microfiche.

CHRIS: So if you have options, what file formats are most desirable?

MEGAN: My engineering team is always happy with source files that are Microsoft Word documents. Structured Word documents convert the cleanest.

PDF text extracts very well. PDF image files need OCR (optical character recognition), and that typically requires some level of manual cleanup. But whatever you have, a service provider is going to want to see every version and every source that you have.

So, once you have your asset types and you know the magnitude of your archives, the next item is you need to prioritise what you have.

Some of your content can be reused and repurposed for new products, while other content is obsolete, so the assets need to be ranked based on market relevancy and business value.

Then you can schedule high value assets for conversion first—and remember, you don’t have to convert everything.
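Ranking assets for conversion can be as lightweight as a scored list. The sketch below assumes you score each asset from 1 to 5 on market relevancy and business value; the `Asset` fields, the scores, and the simple additive ranking are all hypothetical choices, not a prescribed method.

```python
from dataclasses import dataclass

@dataclass
class Asset:
    title: str
    pages: int
    market_relevancy: int  # hypothetical 1-5 score
    business_value: int    # hypothetical 1-5 score
    obsolete: bool = False

def conversion_queue(assets):
    """Drop obsolete assets, then order the rest by combined score, highest first."""
    keep = [a for a in assets if not a.obsolete]
    return sorted(keep, key=lambda a: a.market_relevancy + a.business_value, reverse=True)

# Hypothetical archive inventory.
ASSETS = [
    Asset("Journal backfile 1980-1999", 120_000, 2, 3),
    Asset("Flagship handbook", 4_000, 5, 5),
    Asset("Discontinued product manuals", 9_000, 1, 1, obsolete=True),
    Asset("Standards collection", 30_000, 4, 5),
]

for asset in conversion_queue(ASSETS):
    print(asset.title, asset.pages)
```

Excluding obsolete material up front reflects the point that you don’t have to convert everything; the remaining queue puts the highest-value assets into the earliest batches.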

So these are your three pillars of asset management.

Resources

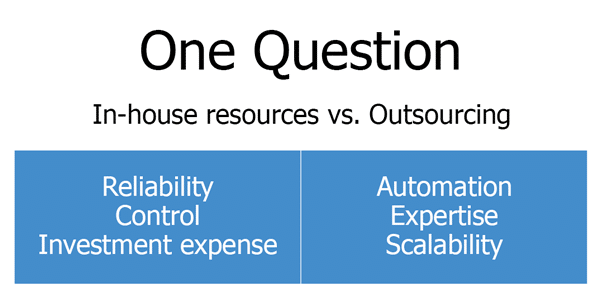

Then you can move on to how you’re going to staff the project, and with that comes one big question. Do you use in-house resources or do you partner?

There is no one right answer. You have to make an honest assessment of your business needs. There are benefits to both.

There are benefits to using in-house resources. First, you have the reliability of known employees, and you can develop new core competencies: you train your employees, and then you have in-house domain expertise to convert content.

Control is another benefit. You have complete oversight and control over how and when the program is implemented.

The question is: ‘Is conversion an expertise that will benefit your business long-term?’ Do you invest in tech and training programs to build expertise for what is typically a one-time initiative?

There are also benefits to outsourcing. A partner will have experience and domain expertise gained by years of converting millions of pages to XML. Partners will have processes, workflows, and technologies already implemented. Partners can scale if you need to speed up the pace.

There’s also flexibility. Your in-house resources remain available to you to focus on high value, customer-facing activities. And then there’s cost. Partnering can be cheaper through automation and favourable offshore rates.

It’s not all black and white. Some organisations implement a hybrid approach. Publishers can designate two or three analysts to support project management, file management, and automating tasks, while the partner will manage the actual data conversion.

There are different ways that you can handle this. You just have to assess what’s best for your business.

Assuming you choose outsourcing, the next two slides will help complete the roadmap with a partner.

Schedules and timelines

You’re going to need to schedule when and how you work with the partner. At the very least, a schedule is going to include the following deployment phases.

Deployment:

- Configuration: Week 1

- Sample validation: Weeks 2–3

- Production: Week 4–TBD

Your partner is going to need to configure their tool sets to ingest and convert content to your desired DTD (document type definition), so be ready to share a large and varied sampling of your content.

Then they’ll need to test their workflows and scripts, so for the first one to two weeks you’re going to receive daily samples. During this time it is vital that you have in-house resources reviewing the samples and providing real-time feedback.

I will not sugarcoat it: this is tedious work. However, the program’s accuracy and quality depend on properly configuring the conversion tools. Once you get samples that are consistent, which can take until about week four, production will begin.

CHRIS: So, what is the best schedule to follow?

MEGAN: The organisation has to be honest about what they can handle. You cannot fall behind your partner’s schedule. Here are some points to consider.

Timeline considerations:

- 3–18 months

- Deliverables schedule is volume of pages divided equally on a weekly or monthly basis

- Client capacity

- Example: If client opts to convert 10,000 pages in 6 months, this equates to 416+ pages per week
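The slide’s 416+ figure corresponds to 10,000 pages divided by 24 weeks (six months counted as four weeks each); over 26 calendar weeks it would be closer to 385. A minimal sketch of splitting an archive into equal weekly deliverables; the `weekly_deliverables` helper is hypothetical.

```python
def weekly_deliverables(total_pages, weeks):
    """Split total_pages into near-equal weekly batches that add up exactly."""
    base, extra = divmod(total_pages, weeks)
    # The first `extra` weeks carry one additional page so nothing is left over.
    return [base + 1 if week < extra else base for week in range(weeks)]

# Slide example: 10,000 pages over six months, taken here as 24 weeks.
schedule = weekly_deliverables(10_000, 24)
print(schedule[0], schedule[-1], sum(schedule))
```

Using integer division with a remainder keeps every batch a whole number of pages while guaranteeing the batches sum to the full archive.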

Timelines can range anywhere from 12 weeks to 18 months.

Once production begins, it’s just simple math. You know, it’s your archive pages divided by how many weeks are in that schedule. So a publisher has to be very realistic about how many pages they can audit once they receive these deliverables.

For instance, at Innodata we hold processed content for two weeks. So if I use the example in the slide, where about 400 pages a week are delivered, we’re always 800 pages ahead of this client.

Now, if a client finds a flaw in their conversion rules, or something they had not considered, and now wants a different approach, the data has to be reconverted. If you are months behind or out of warranty, these mistakes can get expensive.

So you have to staff the program to stay even with your partner, and then you can make corrections quickly and painlessly. It’s a great question and a really important point: you have to stay on pace with your partner.
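Whether in-house staffing keeps pace can be checked with a simple weekly simulation. This sketch assumes the client audits a fixed number of pages per week, and it treats a backlog larger than the two-week hold window (two weeks of deliveries) as "behind"; that threshold and the helper names are hypothetical, not a vendor rule.

```python
HOLD_WEEKS = 2  # from the webinar: processed content is held for two weeks

def review_backlog(weekly_delivery, review_capacity, weeks):
    """Unreviewed pages left on the client's side at the end of each week."""
    backlog, history = 0, []
    for _ in range(weeks):
        backlog += weekly_delivery                   # partner delivers a batch
        backlog = max(0, backlog - review_capacity)  # client audits what it can
        history.append(backlog)
    return history

def weeks_behind(history, weekly_delivery, hold_weeks=HOLD_WEEKS):
    """1-based weeks where the backlog exceeds the hold window (an assumed threshold)."""
    limit = weekly_delivery * hold_weeks
    return [week for week, pages in enumerate(history, start=1) if pages > limit]

# 400 pages delivered weekly, but staff can only audit 300 pages a week.
history = review_backlog(400, 300, 12)
print(weeks_behind(history, 400))
```

Even a modest shortfall of 100 pages a week compounds: in this example the backlog passes the 800-page window in week 9 and keeps growing, which is when late-found rule changes start to mean costly reconversion.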

Quality

If you partner, you also need to define a service level for quality.

When you assess the quality levels of conversion offered in the market, there are two things you need to know. First, be very wary if a vendor guarantees 100% quality. It’s not pragmatic given the typical state of legacy archives.

Archives are made up of decades’ worth of content created by different authors and committees, using different software, under the direction of different directors. With that many variables, archive conversion almost always requires a level of manual intervention and interpretation, which can introduce errors, so 100% quality is not realistic.

The second important point is that decimal places can make a vast difference in your deliverables. There are three standard quality level offerings. They all sound very similar, but you must understand the difference.

99.95% means five errors in every 10,000 characters, or one in 2,000. So, if you consider that a page is approximately 1,500 characters, this means you’re going to get roughly one error on every page (one every 1.3 pages).

Now if you move up to 99.99%, that means one error in every 10,000 characters, or roughly one error every 6.7 pages.

Higher still, there’s 99.995%, which means one error in 20,000 characters, or one error every 13.3 pages.
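The arithmetic behind the three quality levels can be checked in a few lines. This sketch uses the webinar’s approximation of 1,500 characters per page; the `error_profile` helper is hypothetical. Accuracy is passed as a string so `Fraction` keeps the decimal exact instead of inheriting floating-point rounding.

```python
from fractions import Fraction

CHARS_PER_PAGE = 1_500  # approximate page size used in the webinar

def error_profile(accuracy_percent):
    """Return (characters per error, pages per error) for an accuracy like "99.95"."""
    # Strings keep the decimal exact: Fraction("99.95") == Fraction(1999, 20).
    error_rate = 1 - Fraction(accuracy_percent) / 100
    chars_per_error = 1 / error_rate
    pages_per_error = chars_per_error / CHARS_PER_PAGE
    return int(chars_per_error), round(float(pages_per_error), 2)

for level in ("99.95", "99.99", "99.995"):
    print(level, error_profile(level))
```

This prints `99.95 (2000, 1.33)`, `99.99 (10000, 6.67)` and `99.995 (20000, 13.33)`: each extra decimal place roughly halves or fifths the number of errors your staff will have to find and fix.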

It does follow that the higher the level of quality, the higher the pricing per page, but this can be viewed as budgeted dollars spent on clean converted content versus unplanned time and effort in-house staff would have to spend auditing and correcting an error on every page.

Now, when we’re talking about errors, not all errors are created equal. Is the error a simple typo or a missing space in the text, or is it an error in a formula that misleads your customers? Is it a fatal flaw to your product? So, think about categorisation as well.

With categorisation, and weighting of errors, you tighten service levels and enhance the quality of your deliverables.
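Categorisation and weighting can be expressed as a severity-weighted error rate. The categories and weights below are hypothetical placeholders; real ones would be agreed with your conversion partner as part of the service level.

```python
# Hypothetical severity weights; real categories and weights would be agreed with the partner.
SEVERITY_WEIGHTS = {"typo": 1, "missing_space": 1, "formula": 10, "fatal": 50}

def weighted_errors_per_10k(error_counts, total_chars):
    """Severity-weighted error count, normalised to errors per 10,000 characters."""
    weighted = sum(SEVERITY_WEIGHTS[category] * count
                   for category, count in error_counts.items())
    return weighted * 10_000 / total_chars

def meets_service_level(error_counts, total_chars, max_weighted_per_10k):
    """True if the weighted error rate is within the agreed (hypothetical) limit."""
    return weighted_errors_per_10k(error_counts, total_chars) <= max_weighted_per_10k

# A 100-page batch at roughly 1,500 characters per page.
batch_chars = 150_000
print(meets_service_level({"typo": 3, "formula": 1}, batch_chars, 1.0))
print(meets_service_level({"typo": 3, "formula": 2}, batch_chars, 1.0))
```

Unweighted, both batches (four and five errors in 150,000 characters) would comfortably pass a one-in-10,000 limit. With weighting, the second batch fails because of a second formula error, which is exactly the tightening of service levels described above.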

So, we’ve gone through the four areas. After resolving asset management (including asset types), resources, schedule and your quality levels, you now have the data that you need to build your conversion plan and your budget.
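Once the four areas are defined, a first-pass budget is pages times a per-page rate for the chosen quality level, plus per-item charges for technical content. In this sketch every rate is a placeholder in arbitrary price units, not a real market price; only the ordering (higher quality, higher per-page rate) follows the webinar.

```python
# Placeholder rates in arbitrary price units; they are NOT real market prices.
PER_PAGE_RATE = {"99.95": 1.00, "99.99": 1.40, "99.995": 1.80}
PER_ITEM_RATE = {"formulas": 0.25, "figures": 0.50, "tables": 0.75}

def estimate_budget(pages, technical_items, quality_level):
    """Page cost at the chosen quality level plus per-item cost for technical content."""
    page_cost = pages * PER_PAGE_RATE[quality_level]
    item_cost = sum(PER_ITEM_RATE[kind] * count
                    for kind, count in technical_items.items())
    return round(page_cost + item_cost, 2)

# Hypothetical archive: 10,000 pages plus projected technical items.
items = {"formulas": 2_000, "figures": 800, "tables": 400}
for level in PER_PAGE_RATE:
    print(level, estimate_budget(10_000, items, level))
```

Comparing levels side by side makes the trade-off concrete: the extra spend on a higher quality level can be weighed against the in-house hours needed to find and correct the additional errors.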

Conversion+

This is what we at Innodata like to call Conversion Plus, because there are many other data initiatives beyond content conversion, and I’d like to highlight a few.

So how do you continue to improve and expand your content sets? What’s left?

Your data. Is it clean? A platform is only as good as its data. Sometimes a database can be holding a lot of out-of-date or obsolete information. It may need a good scrub. Do you have metadata that’s not updated consistently, or do you need multiple levels of new metadata added for it to be effective?

Let’s consider your frontlist, all the new content being developed. With XML-based workflows, many of the remaining publishing tasks are repeatable, low-knowledge tasks. Is this something that you should outsource? Do you want a partner to handle formatting and layout in Typefi?

If you do that, your internal resources can be focused on high value activities like working with customers on new products and services.

Also think about expanding your data sets. In the quest for fresh new content, you can implement web scraping and content collecting initiatives. You can collect industry or regulatory news that augments and complements your existing data.

These are just a few next steps to think about to improve and expand your content offerings.

Conclusion

All right, that’s all from me today, Chris. I’m happy to talk in more detail or answer any questions by phone or email. Thank you very much.

CHRIS: Thanks, Megan, great job, and please feel free to reach out to Megan if you have any questions about your content conversion needs. Thanks, and have a great day.

About the presenters

Megan Farrell

Director, Strategic Accounts | Innodata

Innodata is a leading provider of business process, technology and consulting services, as well as products and solutions that help clients create, manage, use, and distribute digital information. Innodata Consulting helps organisations migrate from traditional print/PDF to XML workflows, while Innodata Editorial Services supports end-to-end publishing operations.

Since joining Innodata in 2010, Megan’s responsibilities for global accounts include business development, design and implementation of new programs, business process analysis, and risk and issue management.

Chris Hausler

Business Development Director (US) | Typefi

Chris has over 20 years’ experience in improving organisational processes through technology, including a decade working with publishers to define and deliver solutions that dramatically improve the way they publish content. He is always excited about the opportunities offered by new technologies.

Chris holds a Bachelor of Arts (Economics) from the University of Richmond, and a Master of Business Administration from Widener University.